In this post, I list the steps (with screenshots) for setting up Hadoop (stable release 1.2.1) as a single-node cluster on Windows 7.

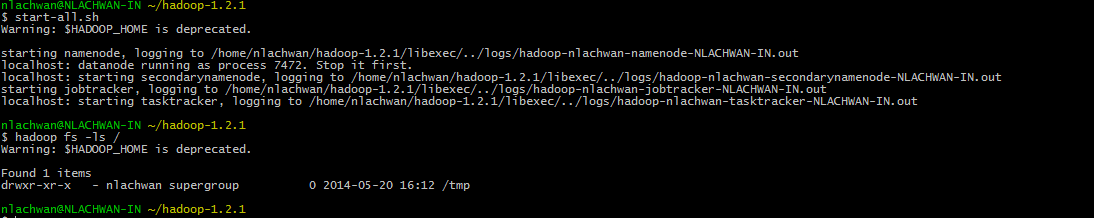

For an introduction to Hadoop, refer to the following article.

We will need the following:

- JDK 1.6+ installed on the Windows machine

- Cygwin (with OpenSSH)

- Hadoop 1.2.1

Installing JDK 1.6+

I installed JDK 1.7.0_55 for this setup.

It can be downloaded from the Oracle JDK download page: http://www.oracle.com/technetwork/java/javase/downloads/index.html

After installation, create a new system environment variable named JAVA_HOME that points to the JDK folder.

[Right-click Computer > Properties > Advanced System Settings > Environment Variables > New (under System variables); set the name to JAVA_HOME and the value to D:\Java\jdk1.7.0_55 (or wherever your JDK is installed)]

Installing and setting up Cygwin (with OpenSSH)

Refer to the following article for a step-by-step guide: http://mylearningcafe.blogspot.com/2014/05/installing-and-seting-up-cygwin-with.html

Installing and setting up Hadoop

- Download hadoop-1.2.1 from http://mirror.metrocast.net/apache/hadoop/common/hadoop-1.2.1/ to your local machine.

- Use WinZip to extract the .tar file from the downloaded archive.

- Use WinZip again to extract the hadoop folder from the .tar file, and place the folder in the Cygwin directory under <Cygwin_install_dir>\home\<user>.

- For example, I placed it under C:\cygwin64\home\nlachwan, so my Hadoop folder is C:\cygwin64\home\nlachwan\hadoop-1.2.1.
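If you prefer to stay in the Cygwin shell, the two WinZip steps can also be done with tar. This is a minimal sketch, assuming GNU tar (with gzip) is installed in Cygwin and that the downloaded file is named hadoop-1.2.1.tar.gz; both that file name and the helper name extract_hadoop are illustrative, not part of the original steps.

```shell
#!/usr/bin/env bash
# Sketch: unpack a Hadoop release tarball from the Cygwin shell instead of WinZip.
# Assumption: the archive is a gzip-compressed tar (e.g. hadoop-1.2.1.tar.gz).

# extract_hadoop ARCHIVE DEST_DIR
# Extracts ARCHIVE into DEST_DIR (e.g. "$HOME", which is /home/<user> in Cygwin).
extract_hadoop() {
  local archive=$1 dest=$2
  mkdir -p "$dest"               # make sure the destination exists
  tar -xzf "$archive" -C "$dest" # -z: gunzip, -x: extract, -C: target dir
}

# Example (hypothetical file name):
# extract_hadoop "$HOME/hadoop-1.2.1.tar.gz" "$HOME"
```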

Edit <Hadoop Home>/conf/hadoop-env.sh to set JAVA_HOME:

export JAVA_HOME=D:\\Java\\jdk1.7.0_55 (note the doubled backslashes)
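The doubled backslashes matter because hadoop-env.sh is a shell script, and bash treats an unquoted backslash as an escape character that it then drops. The snippet below (plain bash, no Hadoop needed) shows the effect. The single-quoted form should yield the same value, though this post only describes the doubled form.

```shell
#!/usr/bin/env bash
# Demonstrates how bash reads the JAVA_HOME line in hadoop-env.sh.
# An unquoted backslash escapes the next character and is itself removed.

single=D:\Java\jdk1.7.0_55     # each lone backslash is dropped
double=D:\\Java\\jdk1.7.0_55   # each "\\" yields one literal backslash
quoted='D:\Java\jdk1.7.0_55'   # single quotes keep backslashes as typed

printf '%s\n' "single: $single"   # prints: single: D:Javajdk1.7.0_55
printf '%s\n' "double: $double"   # prints: double: D:\Java\jdk1.7.0_55
printf '%s\n' "quoted: $quoted"   # prints: quoted: D:\Java\jdk1.7.0_55
```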

Update <Hadoop Home>/conf/core-site.xml with the XML below to set the Hadoop file system property:

<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:50000</value>
  </property>
</configuration>
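As an alternative to editing the file in a Windows editor, it can be written from the Cygwin shell with a here-document, which gives it Unix (LF) line endings. A minimal sketch: the function name write_core_site and the conf path in the example are assumptions; the XML is the same as above plus a standard XML declaration.

```shell
#!/usr/bin/env bash
# Sketch: write core-site.xml from the Cygwin shell (LF line endings).
# CONF_DIR is assumed to be <Hadoop Home>/conf.

# write_core_site CONF_DIR
write_core_site() {
  local conf_dir=$1
  mkdir -p "$conf_dir"
  # Quoted 'EOF' keeps the body literal (no variable expansion).
  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:50000</value>
  </property>
</configuration>
EOF
}

# Example: write_core_site "$HOME/hadoop-1.2.1/conf"
```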

Update <Hadoop Home>/conf/mapred-site.xml to set the JobTracker address:

<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>localhost:50001</value>
  </property>
</configuration>
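To double-check an edit, a small sed helper can read a property's value back out of a *-site.xml file. This is a rough sketch, not an XML parser: it assumes the layout used in this post, with the <value> line directly after the <name> line. The helper name get_prop is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the <value> of a named property in a Hadoop *-site.xml.
# Assumes <name> and <value> sit on consecutive lines, as in this post.

# get_prop FILE PROPERTY_NAME   -> prints the value, or nothing if not found
get_prop() {
  local file=$1 name=$2
  # On the matching <name> line, load the next line (n) and print
  # only the text between <value> and </value>.
  sed -n "/<name>${name}<\/name>/{n;s/.*<value>\(.*\)<\/value>.*/\1/p;}" "$file"
}

# Example: get_prop "$HOME/hadoop-1.2.1/conf/mapred-site.xml" mapred.job.tracker
```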

Create two folders, "data" and "name", under /home/<user> and run chmod 755 on both.

For example: /home/nlachwan/data and /home/nlachwan/name
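The folder step above as a shell sketch; the function name make_hdfs_dirs is illustrative, and inside Cygwin you would pass $HOME, which is /home/<user>.

```shell
#!/usr/bin/env bash
# Sketch: create the HDFS "data" and "name" folders and set mode 755.

# make_hdfs_dirs BASE_DIR
make_hdfs_dirs() {
  local base=$1
  mkdir -p "$base/data" "$base/name"        # -p: no error if they exist
  chmod 755 "$base/data" "$base/name"       # rwxr-xr-x, as in the step above
}

# Example: make_hdfs_dirs "$HOME"
```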

Update <Hadoop Home>/conf/hdfs-site.xml as below (put your own username instead of nlachwan):

<configuration>
  <property>
    <name>dfs.data.dir</name>
    <value>/home/nlachwan/data</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>/home/nlachwan/name</value>
  </property>
</configuration>
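Instead of replacing the username by hand, the same hdfs-site.xml can be generated from the shell so that the paths come from $HOME. A minimal sketch, assuming the folders were created under $HOME as described above; write_hdfs_site is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: generate hdfs-site.xml with paths under a given base directory.

# write_hdfs_site CONF_DIR BASE_DIR
write_hdfs_site() {
  local conf_dir=$1 base=$2
  mkdir -p "$conf_dir"
  # Unquoted EOF so that ${base} is expanded into the XML.
  cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.data.dir</name>
    <value>${base}/data</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>${base}/name</value>
  </property>
</configuration>
EOF
}

# Example: write_hdfs_site "$HOME/hadoop-1.2.1/conf" "$HOME"
```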

Note: You could run dos2unix on the above files if you edited them in Windows. I skipped this step, and it made no difference for me.
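If dos2unix is not installed, stripping carriage returns with tr has the same effect on these files. A minimal sketch; strip_cr is an illustrative name, and it removes every CR character, which is fine for these XML and .sh files.

```shell
#!/usr/bin/env bash
# Sketch: a dos2unix stand-in that deletes carriage returns (\r) in place.

# strip_cr FILE...
strip_cr() {
  local f
  for f in "$@"; do
    # Write to a temp file first, then replace the original.
    tr -d '\r' < "$f" > "$f.tmp" && mv "$f.tmp" "$f"
  done
}

# Example: strip_cr "$HOME"/hadoop-1.2.1/conf/*-site.xml "$HOME"/hadoop-1.2.1/conf/hadoop-env.sh
```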

After setup, add the Hadoop bin folder to the system PATH environment variable.

[Right-click Computer > Properties > Advanced System Settings > Environment Variables > select PATH under System variables > Edit; append ;C:\cygwin64\home\nlachwan\hadoop-1.2.1\bin (or the bin folder of wherever your Hadoop is installed)]
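The Windows PATH entry is what this post uses. As an alternative sketch, the Hadoop bin folder can also be added inside Cygwin only, for example from ~/.bashrc. The helper add_to_path is hypothetical; it prepends the folder only if it is not already on PATH.

```shell
#!/usr/bin/env bash
# Sketch: idempotently prepend a directory to PATH (e.g. from ~/.bashrc).

# add_to_path DIR
add_to_path() {
  case ":$PATH:" in
    *":$1:"*) ;;               # already listed: leave PATH unchanged
    *) PATH="$1:$PATH" ;;      # otherwise put it first
  esac
  export PATH
}

# Example: add_to_path "$HOME/hadoop-1.2.1/bin"
```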

Format HDFS:

In the Cygwin terminal, run hadoop to confirm that it is working:

nlachwan@NLACHWAN-IN ~
$ hadoop
Warning: $HADOOP_HOME is deprecated.
Usage: hadoop [--config confdir] COMMAND
where COMMAND is one of:
  namenode -format     format the DFS filesystem
  secondarynamenode    run the DFS secondary namenode
  namenode             run the DFS namenode
  datanode             run a DFS datanode
  dfsadmin             run a DFS admin client
  mradmin              run a Map-Reduce admin client
  fsck                 run a DFS filesystem checking utility
  fs                   run a generic filesystem user client
  balancer             run a cluster balancing utility
  oiv                  apply the offline fsimage viewer to an fsimage
  ...

In the Cygwin terminal, run hadoop namenode -format to format HDFS.

Run stop-all.sh to stop any Hadoop daemons that are already running.

Run start-all.sh to start all the Hadoop daemons.

If you see an ssh "connection refused" error, follow all the steps under "Setup authorization keys".
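One way to confirm that start-all.sh brought everything up is the JDK's jps tool, which lists running Java processes as "<pid> <ClassName>". The sketch below assumes jps is on your PATH and that a single-node Hadoop 1.x setup should show NameNode, DataNode, SecondaryNameNode, JobTracker and TaskTracker; missing_daemons is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: report which Hadoop 1.x daemons are missing from `jps` output.
# Reads jps output ("<pid> <ClassName>") on stdin.

missing_daemons() {
  local out d
  out=$(cat)
  for d in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
    # -w matches whole words only, so "NameNode" does not match
    # the "SecondaryNameNode" line.
    printf '%s\n' "$out" | grep -qw "$d" || printf '%s\n' "$d"
  done
}

# Example: jps | missing_daemons    # prints nothing when all five are up
```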

Once Hadoop is running, test the Hadoop file system by running "hadoop fs -ls /".
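For a slightly fuller check than listing the root directory, the sketch below writes, reads and removes a small file. It uses only the Hadoop 1.x fs sub-commands -mkdir, -put, -cat and -rmr; the HDFS path /smoke-test and the HADOOP_CMD override are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Sketch: HDFS round-trip test (mkdir, put, cat, then clean up).
# HADOOP_CMD defaults to "hadoop"; /smoke-test is an arbitrary HDFS path.

hdfs_smoke_test() {
  local hadoop=${HADOOP_CMD:-hadoop} dir=/smoke-test local_file rc
  local_file=$(mktemp)
  echo "hello hdfs" > "$local_file"

  "$hadoop" fs -mkdir "$dir" &&
    "$hadoop" fs -put "$local_file" "$dir/hello.txt" &&
    "$hadoop" fs -cat "$dir/hello.txt"
  rc=$?

  "$hadoop" fs -rmr "$dir" >/dev/null 2>&1   # remove the HDFS test folder
  rm -f "$local_file"                        # remove the local temp file
  return "$rc"
}

# Example: hdfs_smoke_test    # should print "hello hdfs"
```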

You can also check the following web interfaces:

http://localhost:50070/ (NameNode)

http://localhost:50030/ (JobTracker)
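If a page does not load, a quick way to tell whether anything is listening on those ports is bash's built-in /dev/tcp redirection. A minimal sketch; port_open is an illustrative name, and the check only confirms that the port accepts connections, not that the web UI is healthy.

```shell
#!/usr/bin/env bash
# Sketch: check whether the Hadoop web UI ports accept TCP connections.
# Relies on bash's /dev/tcp/HOST/PORT redirection (not available in plain sh).

# port_open HOST PORT   -> exit status 0 if a connection succeeds
port_open() {
  (exec 3<>"/dev/tcp/$1/$2") 2>/dev/null
}

for port in 50070 50030; do
  if port_open localhost "$port"; then
    echo "port $port: listening"
  else
    echo "port $port: not reachable"
  fi
done
```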

Your Hadoop setup is complete. Have fun!!
